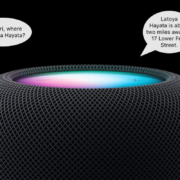

Be Alert for Deepfake Phishing Scams

Phishing scams have entered a new, AI-powered phase: attackers can now convincingly mimic real people. Using voice or video deepfakes, they can impersonate a CEO authorizing an urgent payment, an IT staff member requesting access, or a family member who needs help.

If someone you know calls you by voice or video from an unfamiliar number and urges you to reveal confidential information or send money immediately, slow down, check for telltale signs, and verify before acting. Listen for unnatural pauses, overly smooth phrasing, or odd emotional timing. Visually, look for inconsistent lighting or shadows; distortions or artifacts around the hairline, ears, or teeth; and anything that seems “off,” especially around the mouth and eyes when the person moves.

To verify, ask for a detail that only the real person would know. If you’re at all unsure whether the call is legitimate, hang up and contact the person (or someone else who can confirm the request) through a separate, trusted channel. A few seconds of skepticism can prevent a costly mistake.

(Featured image by iStock.com/Tero Vesalainen; article image by iStock.com/Boris023)

Social Media: Phishing has a new face, literally. Scammers can now use AI tools to create fake voices and videos that seem real. Before you act on an “urgent” request, look and listen for clues that something is off, and verify through another channel.